
In the midst of ever-increasing competition, the need to deliver high-quality products is now more critical than ever.

And while product quality has always been an essential factor in any industry or field, the aftermath of the pandemic has led to shorter attention spans, meaning it only takes a split second for users to develop an opinion about a website and decide whether to wait or switch over to a competitor.

Ultimately, the responsibility falls on the shoulders of QA analysts.

The importance of product quality is not lost on us. But are test automation engineers, or anyone involved with QA, doing enough to achieve a seamless customer experience?

To improve on the efficiency of manual testing, Intelligent Automation Testing emerged as the new (and quite popular) solution, and for good reasons.

Why Use Automation Testing?

The reason Automation Testing rose in popularity is evident: it saves the time, cost, and human effort of sifting through multiple application screens and manually comparing the results of various input combinations.

On top of that, recording each result manually and running the tests over and over is a hassle that test automation engineers have gladly handed over to AI test automation tools that do the work for them.

Put simply, Visual AI in test automation is a means to achieve bigger, better, and faster QA. Watch this video on QA test automation for a deeper dive into what automation testing can achieve.

Yet, despite the benefits and opportunities that come with automation testing, there is still a need for an even more intelligent test automation solution.

The reason is simple: automation testing hasn't fully delivered on its promise to streamline modern software delivery.

Instead, it comes with its own set of challenges and has even created new bottlenecks. Let's see how.

Challenges with Automation Testing

Assume you're working for a young, mid-sized company that is still designing its processes and protocols, all while trying to deliver a good-quality product.

Let's say you're hired to automate its regression suite to ensure nothing breaks during delivery. As a test automation engineer, most of your time goes into designing and writing the programs that run automated tests against the software.

So you start writing test cases and immediately notice that best practices weren't followed while the product was being developed (in this case, the elements have no test-ids).

Now you have two options:

- Ask the team to update the code to include the test-ids (which we know will probably never happen), or

- Work with whatever you have.

So you continue writing test cases that, from the get-go, are built to be flaky and possibly flawed.

Fast forward, and a few tests in the pipeline fail regularly, not because of actual issues in the product but because of highly dubious selectors.
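To make the test-id problem concrete, here is a minimal sketch of a hypothetical helper, `pickSelector`, that prefers a dedicated test-id attribute and only falls back to an element id or a raw CSS path when nothing better exists. The descriptor shape `{ testId, id, cssPath }` and the `data-testid` attribute name are assumptions for illustration (it is a common convention; Cypress's own best-practices guide suggests attributes such as `data-cy`). When only the CSS path is left, the test is effectively coupled to the page layout, which is what makes it flaky.

```javascript
// Hypothetical helper: pick the most robust selector available for an element.
// The descriptor shape { testId, id, cssPath } is an assumption for illustration.
function pickSelector({ testId, id, cssPath }) {
  // 1. A dedicated test id is independent of styling and layout changes.
  if (testId) return { selector: `[data-testid="${testId}"]`, strategy: 'test-id' };
  // 2. An element id is usually stable, though developers may rename it.
  if (id) return { selector: `[id="${id}"]`, strategy: 'id' };
  // 3. A structural CSS path breaks whenever the surrounding markup changes.
  return { selector: cssPath, strategy: 'css-path' };
}

// With no test id and no element id, only the brittle option remains.
console.log(pickSelector({ cssPath: 'main > div:nth-child(3) > form > input' }).strategy); // css-path
```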

As a result, you end up pouring effort, time, and company resources into maintaining and fixing tests when you could have spent them increasing test coverage.

Whether you admit it or not, all of us "Automation Testers" have experienced this at least once in our careers. It changes our mindset: the goal quietly shifts to getting a test to 'Pass' rather than letting it 'Fail' because it found an actual bug in the product.

Clearly, the maintenance effort that automation testing demands takes up time and, more importantly, distracts you from the results you actually want to achieve.

So, what can be done instead?

Enter: Autonomous Testing.

What is Autonomous Testing?

To put it simply, Autonomous Testing is the next, enhanced version of automation testing.

Think about the number of tests you'd have to write to cover each browser on each operating system and across many devices (desktop, mobile, tablet), and then about debugging each individual failure.

Autonomous Testing removes the hassle and stress of maintenance so that you can focus on the single most important goal of QA test automation: writing tests with ample coverage that pass when the product is correct, and identifying new bugs along the way.

As the name suggests, test automation engineers who adopt Autonomous Testing are responsible for less manual work such as maintenance and debugging (because AI-based testing tools provide a root-cause analysis of each bug, you don't have to debug it manually). This is a win-win for everyone involved.

And how does Autonomous Testing offer this capability? It brings Visual AI into test automation.

How Can Artificial Intelligence Be Used in Automation Testing?

Visual AI in test automation offers the ability to identify the root cause of a specific problem, pointing developers to the exact piece of code to be fixed.

It introduces the autonomy that lets test engineers focus their attention and energy on increasing test coverage rather than on writing hundreds of lines of code just to validate how the website looks.
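Root-cause analysis needs some way to tie a visual difference back to the markup behind it. The sketch below shows only that basic idea and is not how Applitools or any other tool necessarily does it: given the rectangle where a difference was detected and the on-screen boxes of page elements, it lists the overlapping elements, most specific (smallest) first. The names `overlaps` and `candidateElements`, the `{ x, y, width, height }` rectangle shape, and the element boxes in the usage example are all hypothetical.

```javascript
// Simplified illustration: map a visual-difference rectangle back to the
// elements whose on-screen boxes overlap it, smallest (most specific) first.
// Rectangles are { x, y, width, height }; elements are { selector, rect }.
function overlaps(a, b) {
  return a.x < b.x + b.width && b.x < a.x + a.width &&
         a.y < b.y + b.height && b.y < a.y + a.height;
}

function candidateElements(diffRect, elements) {
  return elements
    .filter((el) => overlaps(el.rect, diffRect))
    // Smaller boxes are more likely to be the element that actually changed.
    .sort((p, q) => p.rect.width * p.rect.height - q.rect.width * q.rect.height)
    .map((el) => el.selector);
}

// Hypothetical element boxes for a contact form.
const elements = [
  { selector: 'body', rect: { x: 0, y: 0, width: 1000, height: 2000 } },
  { selector: 'header', rect: { x: 0, y: 0, width: 1000, height: 100 } },
  { selector: 'form', rect: { x: 100, y: 500, width: 400, height: 300 } },
  { selector: '#form-field-firstname', rect: { x: 120, y: 520, width: 200, height: 30 } },
];
console.log(candidateElements({ x: 130, y: 525, width: 50, height: 10 }, elements));
// [ '#form-field-firstname', 'form', 'body' ]
```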

In the past, we have seen some solutions to this visual-testing bottleneck in test automation. They came in the form of pixel-difference and DOM-difference validation.

Still, both approaches have limitations that don't fit well with the ideology of Autonomous Testing.
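To see one such limitation, here is a minimal sketch of strict pixel-difference validation, not the implementation of any particular tool. It assumes both screenshots are same-sized RGBA buffers shaped like `{ width, height, data }` (the layout a canvas `ImageData` uses); `countDiffPixels` and `solidImage` are hypothetical names. With zero tolerance, a one-unit color shift, the kind anti-aliasing or font rendering can produce, counts as a difference even though no human would notice it.

```javascript
// Minimal sketch of strict pixel-difference validation.
// Images are assumed to be { width, height, data } with RGBA bytes in `data`.
function countDiffPixels(baseline, current, tolerance = 0) {
  if (baseline.width !== current.width || baseline.height !== current.height) {
    throw new Error('Images must have the same dimensions');
  }
  let diff = 0;
  for (let i = 0; i < baseline.data.length; i += 4) {
    // Compare the R, G, B and A channels of one pixel.
    for (let c = 0; c < 4; c++) {
      if (Math.abs(baseline.data[i + c] - current.data[i + c]) > tolerance) {
        diff++;
        break; // count each pixel at most once
      }
    }
  }
  return diff;
}

// Build a solid-color test image.
function solidImage(width, height, [r, g, b, a]) {
  const data = new Uint8ClampedArray(width * height * 4);
  for (let i = 0; i < data.length; i += 4) {
    data[i] = r; data[i + 1] = g; data[i + 2] = b; data[i + 3] = a;
  }
  return { width, height, data };
}

const baseline = solidImage(2, 2, [255, 255, 255, 255]);
const current = solidImage(2, 2, [255, 255, 255, 255]);
current.data[0] = 254; // one channel shifted by 1, e.g. from anti-aliasing
console.log(countDiffPixels(baseline, current));    // 1
console.log(countDiffPixels(baseline, current, 2)); // 0
```

Raising the tolerance hides this kind of noise, but it also hides small real changes. That trade-off is part of what Visual AI, which tries to see what a human would see, aims to avoid.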

This gap is what Visual AI, and Visual Artificial Intelligence Testing, set out to fill.

What is Visual Artificial Intelligence?

Visual AI is artificial intelligence technology that can see what humans see, intelligently make sense of it, and make decisions or carry out commands accordingly.

As far as web and app automation testing goes, this is a revolutionary capability for UI automation testing.

What is Visual UI testing?

In web development, visual UI testing works by running visual tests that detect, analyze, and compare the visual elements of a website or application. By doing so, visual testing tools verify that the look and feel of the page matches the design and that the required sections are displayed on the page.

Many visual AI testing tools in the industry claim to be the ultimate visual UI testing solution, but in my opinion, Applitools and Percy are at the top, thanks to their easy-to-follow starter kits and the thorough documentation on their websites to help you get started.

In this tutorial, we'll see Applitools in action, and you'll learn how to set it up and use it for intelligent, visual UI automation testing of your website or application.

Setting Up Visual AI For UI Automation Testing

Whether you're testing the UI of a website or an app, Visual AI will prove to be an integral part of the process.

To integrate Visual AI into test automation, use testing tools that adapt seamlessly to your existing test automation framework.

In this tutorial, we'll be using Applitools with Cypress to demonstrate how to implement AI in testing.

(Note: Applitools supports nearly every other popular front-end testing framework as well. You can find the one you prefer here.)

For the sake of this tutorial, we'll assume you already have a Cypress project and know how it works. (If you don't, let us know and we'll help you set it up.)

Let’s get right into it.

Step 1

Install and set up Applitools using the following commands:

```shell
$ npm install @applitools/eyes-cypress
$ npx eyes-setup
```

Step 2

Add the global configuration for your visual tests in the root folder:

applitools.config.js

```javascript
module.exports = {
  // Maximum number of visual tests run concurrently.
  testConcurrency: 1,
  // Read the key from the environment variable set in Step 3 instead of hard-coding it.
  apiKey: process.env.APPLITOOLS_API_KEY,
  browser: [
    // Desktop browsers and viewport sizes. Other browsers are also available.
    { width: 800, height: 600, name: 'chrome' },
    { width: 1600, height: 1200, name: 'firefox' },
    { width: 1024, height: 768, name: 'safari' },
    // Emulated mobile devices. Other devices are available, including iOS.
    { deviceName: 'Pixel 2', screenOrientation: 'portrait' },
    { deviceName: 'Nexus 10', screenOrientation: 'landscape' },
  ],
}
```
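Listing every browser and viewport by hand grows quickly: three browsers at three viewport sizes is already nine entries. As a sketch, assuming you want every combination, a small hypothetical helper, `buildBrowserMatrix`, can generate the `{ width, height, name }` entries for the `browser` array; the viewport sizes below are simply the ones used in the configuration above.

```javascript
// Hypothetical helper: expand browser names x viewport sizes into
// { width, height, name } entries for the `browser` array.
function buildBrowserMatrix(names, viewports) {
  const entries = [];
  for (const name of names) {
    for (const { width, height } of viewports) {
      entries.push({ width, height, name });
    }
  }
  return entries;
}

const desktop = buildBrowserMatrix(
  ['chrome', 'firefox', 'safari'],
  [
    { width: 800, height: 600 },
    { width: 1024, height: 768 },
    { width: 1600, height: 1200 },
  ],
);
console.log(desktop.length); // 9
```

Because applitools.config.js is an ordinary Node module (it uses `module.exports`), a helper like this could live in the same file, with its result spread into `browser` next to the `deviceName` entries.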

Step 3

First, follow the steps to find your Applitools API key, then set it as the environment variable APPLITOOLS_API_KEY before running the visual test.

You can either set it through your IDE or use one of the following commands.

For Mac & Unix:

```shell
$ export APPLITOOLS_API_KEY=<your-api-key>
```

For Windows:

```bat
> set APPLITOOLS_API_KEY=<your-api-key>
```

Step 4

Design your test.

demo.cy.js

```javascript
/// <reference types="cypress" />

describe('Visual AI Testing', () => {
  beforeEach(() => {
    // Open Eyes to start visual testing.
    // Each test should open its own Eyes for its snapshots.
    cy.eyesOpen({
      appName: 'mobileLIVE',
      testName: Cypress.currentTest.title,
    })
  })

  it('should validate the contact-us page', () => {
    // Load the page.
    cy.visit('https://www.mobilelive.ca/contact-us#offices')

    // Verify the full contact-us page is loaded correctly.
    cy.eyesCheckWindow({
      tag: 'Contact-us',
      target: 'window',
      fully: true,
    })
  })

  afterEach(() => {
    // Close Eyes to tell the server it should display the results.
    cy.eyesClose()
  })
})
```

Replace the URL in cy.visit() with the URL of your own website or page.
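Before running the test, it can help to fail fast when the APPLITOOLS_API_KEY variable from Step 3 is missing, rather than finding out partway through a run. The sketch below is a hypothetical check, not part of Applitools or Cypress; the function name `requireEnv` is an assumption, and `env` defaults to `process.env` so it can be tested with a plain object.

```javascript
// Hypothetical pre-run check: return a required environment variable or throw.
// `env` defaults to process.env but can be injected (e.g. in tests).
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  // Trim stray whitespace that often sneaks in when pasting keys.
  return value.trim();
}
```

One way to use it, assuming you're happy for a missing key to stop the run, is to define it in applitools.config.js and write `apiKey: requireEnv('APPLITOOLS_API_KEY')`, so the failure message names the missing variable directly.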

Step 5

Execute the sample test using the following command:

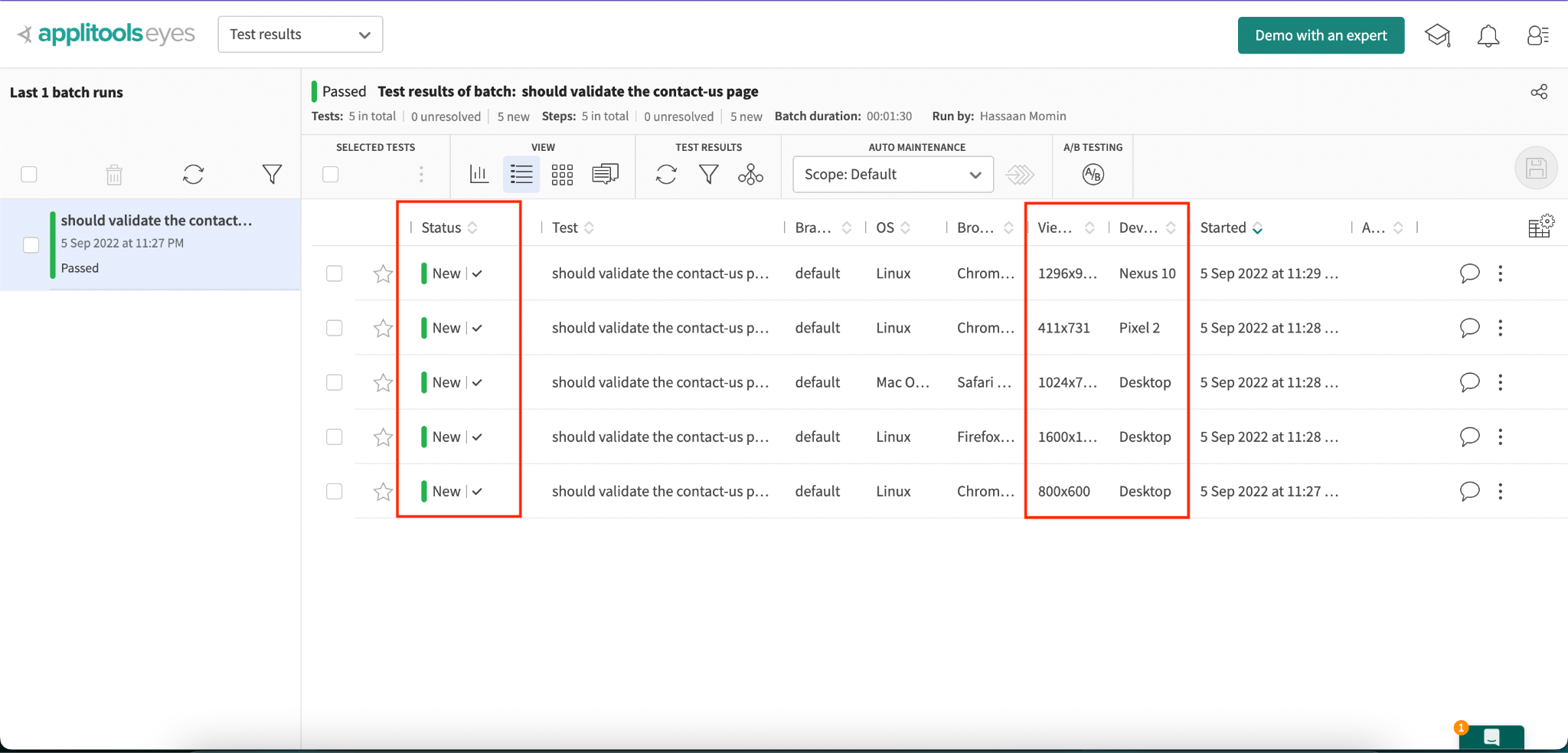

$ npx cypress run --spec cypress/e2e/demo.cy.jsAfter your tests run, you should see results in the Applitools Eyes Test Manager dashboard, which will look something like this:

- The first highlighted column displays the status. As this is the first build, there is nothing to compare it with, hence the status "New".

- The second column highlights all the devices and viewports we globally set for our visual tests in Step 2.

That's pretty much all there is to setting up Visual AI in your test automation.

But let’s rerun the same test and see what happens.

Execute the same “run” command again.

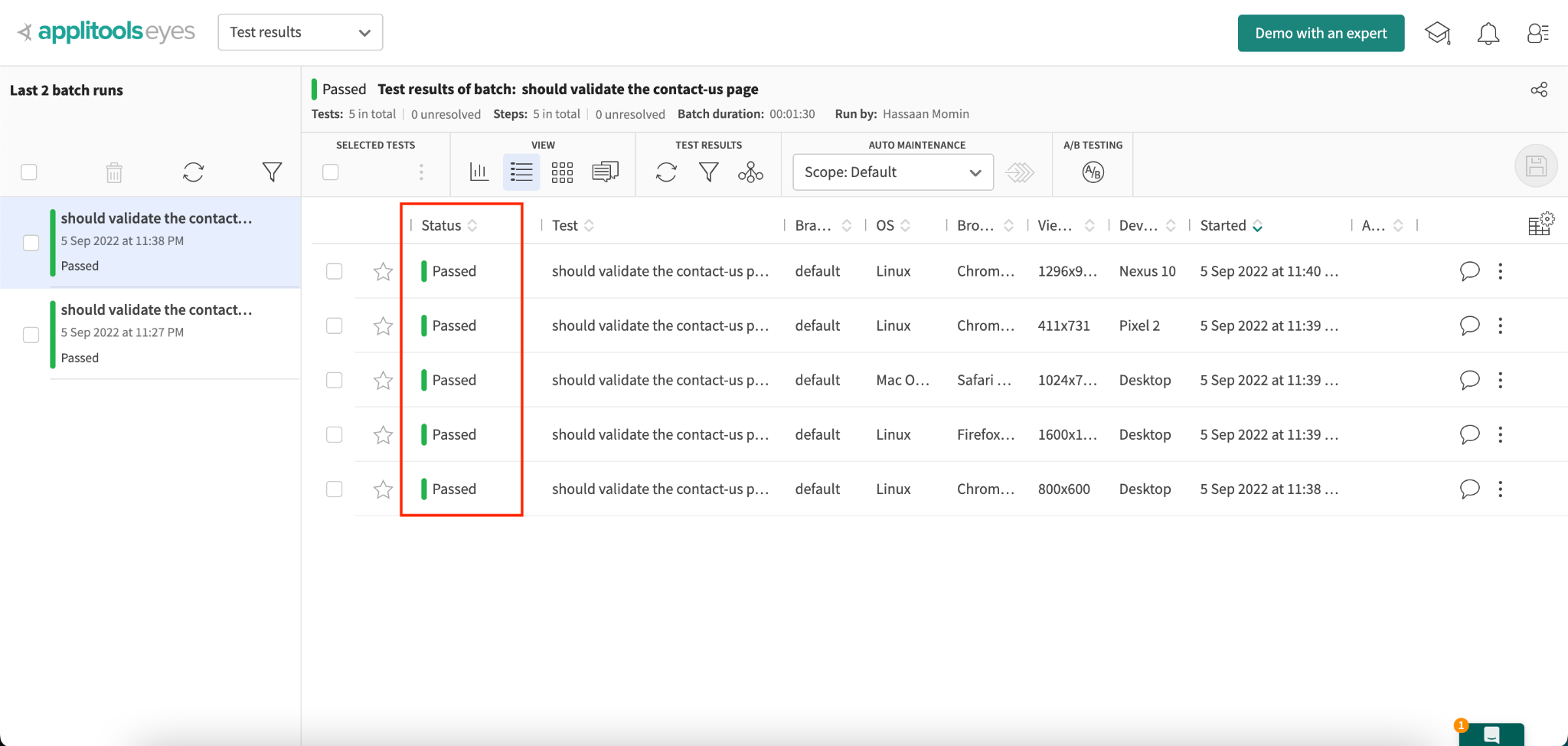

This time, the dashboard will display this:

The tests have the status ‘Passed’ because there is no visual change between the latest web page and the baseline saved in the last build.

Now let’s see what happens in the case of a visual change.

Add the following code to your test file demo.cy.js after the cy.visit() command.

```javascript
cy.get('[id="form-field-firstname"]').type('Testing')
```

Save and rerun the test. The dashboard will now look like this:

This time, because there is a visual change, the initial status is 'Unresolved': the tool can't tell whether the detected change is expected, so the first time a change appears, it lets you decide whether to pass or fail the build.
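The statuses seen so far (New, Passed, Unresolved) follow a simple rule. The sketch below models that rule as described in this walkthrough; it is a deliberate simplification, not Applitools' implementation. `checkpointStatus` and `resolve` are hypothetical names, and `hasVisualDiff` stands in for the tool's actual visual comparison.

```javascript
// Simplified model of the dashboard statuses described above.
// `hasVisualDiff(baseline, current)` stands in for the real visual comparison.
function checkpointStatus(baseline, current, hasVisualDiff) {
  if (baseline === undefined) return 'New'; // first build: nothing to compare against
  if (!hasVisualDiff(baseline, current)) return 'Passed';
  return 'Unresolved'; // a person decides whether the change is expected
}

// Model of the user's decision on an Unresolved checkpoint.
function resolve(decision, baseline, current) {
  if (decision === 'accept') return { status: 'Passed', baseline: current }; // new baseline
  if (decision === 'reject') return { status: 'Failed', baseline }; // keep old baseline
  throw new Error(`Unknown decision: ${decision}`);
}
```

With strings as stand-in snapshots and `(a, b) => a !== b` as the comparison, `checkpointStatus('v1', 'v2', ...)` returns 'Unresolved', and `resolve('accept', 'v1', 'v2')` promotes 'v2' to the new baseline.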

Based on your response, the baseline image will be updated. In addition to this, you can:

- View the root-cause analysis for the identified difference

- Create a bug

- Add a remark

- Toggle between baseline & current build

- Highlight the identified difference

- Specify a portion of the page that will be ignored and won’t be checked for any visual changes

And the list goes on.
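The option to ignore a portion of the page can be pictured as skipping part of both screenshots before comparing them. The sketch below illustrates that idea on RGBA buffers shaped like a canvas `ImageData` (`{ width, height, data }`); it is not how Applitools implements ignore regions, and `diffOutsideRegions`, `insideAnyRegion`, and the `{ x, y, width, height }` region shape are assumptions.

```javascript
// Simplified ignore-region comparison on RGBA pixel buffers
// shaped like { width, height, data } (as in a canvas ImageData).
function insideAnyRegion(x, y, regions) {
  return regions.some(
    (r) => x >= r.x && x < r.x + r.width && y >= r.y && y < r.y + r.height,
  );
}

// Count pixels that differ, skipping any pixel inside an ignored region.
function diffOutsideRegions(baseline, current, regions = []) {
  if (baseline.width !== current.width || baseline.height !== current.height) {
    throw new Error('Images must have the same dimensions');
  }
  let diff = 0;
  for (let y = 0; y < baseline.height; y++) {
    for (let x = 0; x < baseline.width; x++) {
      if (insideAnyRegion(x, y, regions)) continue;
      const i = (y * baseline.width + x) * 4; // index of this pixel's R byte
      for (let c = 0; c < 4; c++) {
        if (baseline.data[i + c] !== current.data[i + c]) {
          diff++;
          break; // count each pixel at most once
        }
      }
    }
  }
  return diff;
}
```

Ignoring a region like this is useful for content that legitimately changes on every run, such as dates, ads, or rotating banners.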

From here, it's a matter of adding more layers to your AI-based test automation process as you see fit: add the visual tests to your CI/CD pipeline, or integrate Slack to get test results instantly. The world is your oyster.

Takeaway

While cutting-edge ML/AI technologies are weaving their way into autonomous software testing and development, on their own they are still not enough.

There is still a pressing need to implement best practices, introduce well-defined processes, and educate the community so that it can understand and use the real power of Visual AI in test automation.

Revolutionary methods and approaches like Hyper-Automation (which also largely uses AI to automate multiple IT processes) are bound to be more beneficial than risky as we move into the future. To learn how you can overcome E2E complexity with Hyper-Automation, watch this video.

What can be said with a good deal of certainty is that Autonomous Testing is the future we're looking at, and it only makes sense to start adopting today the trends that will be tomorrow's norms.